AI Powered Search and Recommendation System

Analysis of search history and user activity on the Internet serves as the foundation for personalized recommendations, which have become a powerful marketing tool for the eCommerce industry and online businesses. Artificial intelligence is also improving online search. It can make recommendations based on a user's visual preferences rather than on product descriptions alone, tailoring results to each customer's needs and preferences.

Overview

Modern search engines help us find answers to practical questions in the ever-growing ocean of Internet data within seconds. How is this possible? What is behind such a fast search process? This blog discusses the artificial intelligence (AI) search technologies that quickly satisfy users' informational needs and provide the most appropriate recommendations.

AI helps recommendation engines make fast, relevant recommendations customized to each customer's needs and preferences. With AI, online search is improving because it can make recommendations based on users' visual preferences rather than on product specifications alone.

AI-powered recommendation engines can serve as an alternative to search fields, since they help users discover items or content they might not otherwise find. That is why recommendation engines now play an essential role on websites such as Amazon, Facebook, and YouTube. Let's dig a bit deeper into how recommendation engines work, how they collect data, and how they make recommendations.

Evolution of search engines

Archie, the first search engine, searched FTP sites to build an index of downloadable files. Because of limited storage space, only the file listings were indexed, not the contents of each file. Archie let users look around the Internet, but its functionality was very limited compared to current search engines: users could make only simple requests to locate files, and they had to download a file to read it.

As the number of documents on the Internet grew, systems emerged to rank pages by relevance. To rank pages, search engines consider the keywords in a query, how often those words appear, and how significant they are in the context of the document.

This need gave rise to the statistical measure TF-IDF:

TF (Term Frequency): the ratio of the number of occurrences of a word to the total number of words in a document, which evaluates the significance of a term within an individual document.

IDF (Inverse Document Frequency): the inverse of the fraction of documents in a collection that contain the word, usually taken as a logarithm. This reduces the weight of words that appear in many documents.
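The two definitions above can be sketched in Python as follows. This is a minimal illustration, assuming whitespace tokenization and the common logarithmic form idf = log(N / n_t), where N is the number of documents and n_t is the number of documents containing the term; real search engines use many variants and smoothing schemes. The function names and the toy corpus are hypothetical.

```python
import math
from collections import Counter

def tf(term, doc_tokens):
    """Term frequency: occurrences of the term divided by the total words in the document."""
    return Counter(doc_tokens)[term] / len(doc_tokens)

def idf(term, corpus):
    """Inverse document frequency: log(number of documents / documents containing the term)."""
    n_containing = sum(1 for doc in corpus if term in doc)
    return math.log(len(corpus) / n_containing) if n_containing else 0.0

def tf_idf(term, doc_tokens, corpus):
    """Combine both measures: frequent in this document, rare across the collection."""
    return tf(term, doc_tokens) * idf(term, corpus)

# Hypothetical toy corpus, tokenized by splitting on whitespace
corpus = [
    "the cat sat on the mat".split(),
    "the dog chased the cat".split(),
    "search engines rank pages".split(),
]

score_the = tf_idf("the", corpus[0], corpus)
score_mat = tf_idf("mat", corpus[0], corpus)
```

Here "mat" scores higher than "the" in the first document even though "the" occurs twice, because "the" also appears in another document and is therefore down-weighted by IDF.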

Google Search emerged in 1998 with an innovative backlink-based ranking algorithm, PageRank. Its essence is that the importance of a page is estimated from the hyperlinks pointing to it: a page linked to by many pages, especially by important ones, is considered more important. Pages with the most (and most important) backlinks are pushed to the top of the rankings.
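The core PageRank idea can be sketched with power iteration. This is an illustrative sketch, not Google's production ranking: it assumes a tiny hypothetical link graph, the damping factor of 0.85 used in the original PageRank paper, and a fixed number of iterations instead of a convergence check. Each page passes its current rank, split evenly, to the pages it links to, so a link from a highly ranked page is worth more than a link from an obscure one.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank via power iteration.

    links maps each page to the list of pages it links to.
    Pages with no outgoing links spread their rank evenly over all pages.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        # Every page receives a baseline share from the "random jump" term
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page: distribute its rank uniformly
                for t in pages:
                    new_rank[t] += damping * rank[page] / n
        rank = new_rank
    return rank

# Hypothetical link graph: C is linked to by A, B, and D; nobody links to D
links = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(links)
```

In this graph, page C receives links from A, B, and D, so it ends up with the highest rank, while D, which no page links to, keeps only the baseline share (1 - 0.85) / 4.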

In 2013, Google created Word2Vec, a group of models that represent words as vectors for semantic analysis. It laid the groundwork for a new AI search technology, RankBrain, launched in 2015. This self-learning system can establish links between separate words, extract hidden semantic connections, and understand the meaning of text. Such search algorithms are based on neural networks and deep learning, which find pages that match both the keywords and the purpose of a query. The primary advantage of neural networks over traditional algorithms is that they are trained rather than explicitly programmed: they can learn complex dependencies between input and output data, loosely analogous to how the human brain builds connections between neurons.
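To make the idea of learning word relationships more concrete, the sketch below shows the first step of Word2Vec's skip-gram variant: turning a sentence into (center word, context word) training pairs within a sliding window. A real Word2Vec model would then train a shallow neural network to predict each context word from its center word; that training step is omitted here, and the window size and example sentence are arbitrary choices for illustration.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every word within `window` positions of each center word."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)               # left edge of the window
        hi = min(len(tokens), i + window + 1)  # right edge (exclusive)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs

pairs = skipgram_pairs("ai improves online search".split(), window=1)
```

Words that repeatedly appear in similar contexts end up with similar vectors after training, which is what lets a search system relate words that are never typed together.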

The fundamental task of all AI search techniques is to better understand complex, long-form queries and return the correct result even when the input is incomplete or distorted.

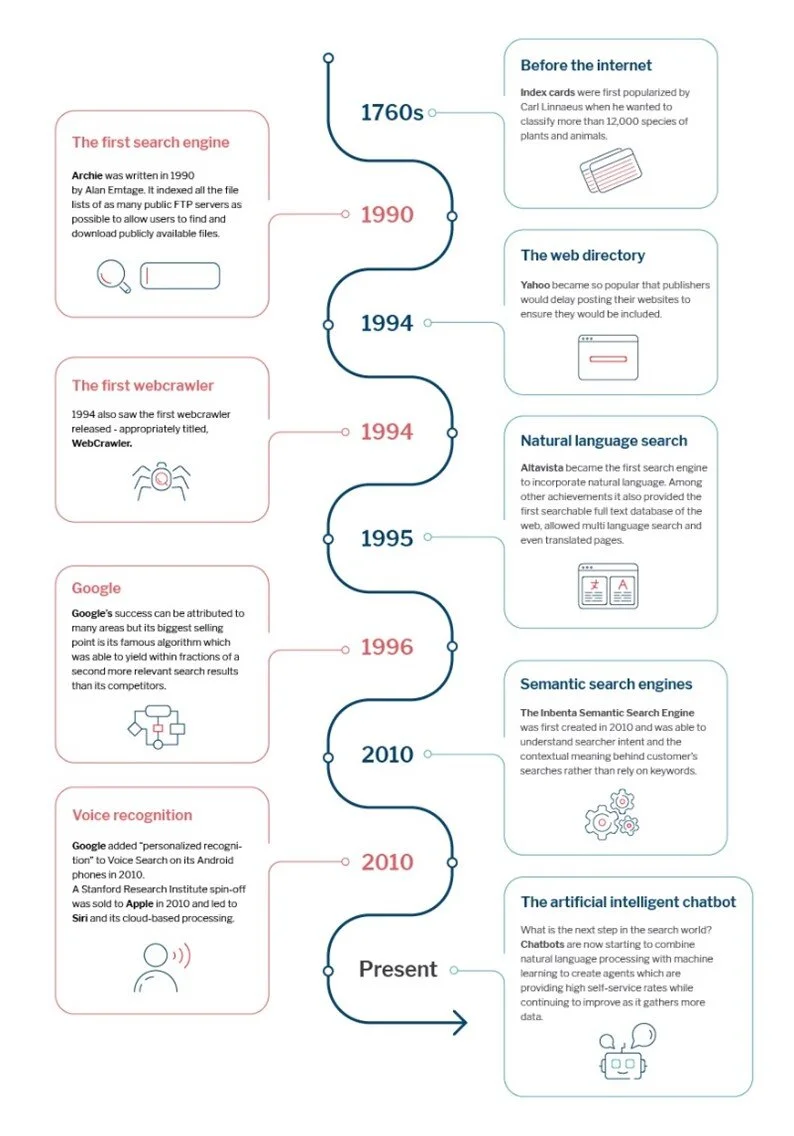

History of the search engine: From index cards to the AI chatbot

Modern search engines are pretty amazing – complex algorithms enable search engines to take your search query and return results that are usually accurate, presenting you with valuable information.

Search engine history started in 1990 with Archie, an FTP site hosting an index of downloadable directory listings. Search engines remained primitive directory listings until they evolved to crawl and index websites, eventually adopting algorithms to optimize relevance.

How does AI search work?

In the context of search, AI usually refers to machine learning (ML) and natural language processing (NLP) modules that determine the intent of a query in order to retrieve information relevant to the user.

NLP and ML

Understanding and adequately responding to the way humans talk is a massive challenge for machines because human speech is unstructured and diverse.

With NLP, computers can detect language patterns and identify relationships between words to understand users' interests. NLP is at the core of voice assistants such as Alexa and Siri. To make its AI more conversational, Google had its intelligence engine read 2,865 romance novels.

Machine learning allows computer programs to act automatically on their understanding of human language and provide replies that improve over time. Machine learning is the science of making systems perform tasks without being explicitly programmed, using mathematical models learned from data. A machine analyzes the data fed into the system and uses algorithms to continuously find patterns and connections, performing tasks that would take a human team weeks or even years.

Semantic search

A search for "best hand sanitizers" in 2021 returns suggestions for products effective against COVID-19 without you needing to specify anything else, which is vastly different from what you would have received in 2019. Understanding a searcher's intent through the contextual meaning of a query, rather than relying on the exact words a person enters, is the domain of semantic search. As search engines better understand the implications of queries and people discover how easy they are to use, expectations change. AI-powered semantic search, built on NLP and machine learning, can interpret queries on its own and return even more relevant results.
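A common way to implement semantic search is to map queries and documents to vectors (embeddings) and rank documents by cosine similarity, so that texts with related meanings score highly even when they share no words. The sketch below assumes hand-made, hypothetical three-dimensional word vectors and averages them into text vectors; a real system would use embeddings learned by a trained model.

```python
import math

# Hypothetical 3-dimensional word embeddings; a real system would learn these from data.
EMBEDDINGS = {
    "sanitizer": [0.9, 0.1, 0.0],
    "disinfectant": [0.85, 0.15, 0.05],
    "gel": [0.7, 0.2, 0.1],
    "hand": [0.6, 0.3, 0.1],
    "laptop": [0.0, 0.2, 0.95],
    "charger": [0.05, 0.1, 0.9],
}

def embed(text):
    """Average the vectors of known words to get one vector for the whole text."""
    vectors = [EMBEDDINGS[w] for w in text.lower().split() if w in EMBEDDINGS]
    if not vectors:
        return [0.0, 0.0, 0.0]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

def cosine(a, b):
    """Cosine similarity: 1.0 for vectors pointing in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def semantic_search(query, documents):
    """Rank documents by the similarity of their meaning vectors to the query's."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)

docs = ["disinfectant gel", "laptop charger"]
results = semantic_search("hand sanitizer", docs)
```

The query "hand sanitizer" ranks "disinfectant gel" first although the two share no words, because their averaged vectors point in nearly the same direction.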

Top content search engines

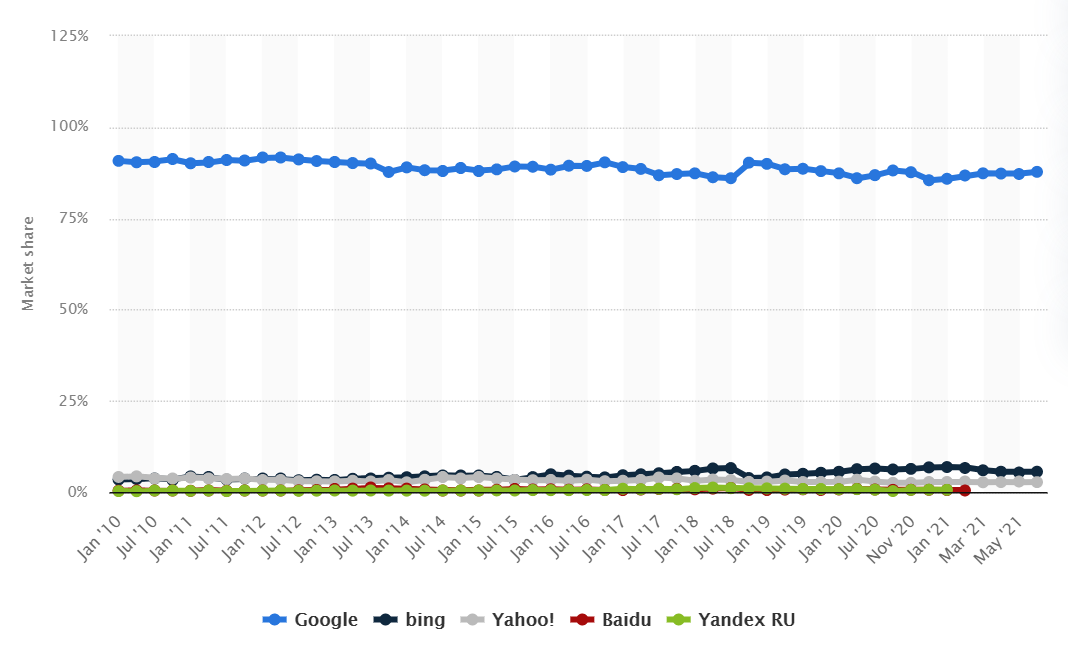

Google has led the search engine market for most of its history and remains the leader today.

In June 2021, Bing had 5.56 percent of the global search engine market, compared to 87.76 percent for market leader Google. Yahoo's market share during the same period was 2.71 percent.

Global market share of leading search engines

AI-based recommender systems

The analysis of search history and user activities on the Internet serves as the foundation for personal recommendations, which have become a powerful marketing tool for the eCommerce industry and online firms.

Recommendation systems do not rely on explicit queries; instead, they analyze users' preferences to recommend goods or services of interest. To anticipate the needs of a particular customer, a recommender considers the following factors:

· Previously viewed pages

· Past purchases

· User's profile (e.g., age, gender, profession, hobbies)

· Similar profiles of other users and their connections

· Geographic location

Therefore, a recommendation engine is a filtering system that prevents information overload and extracts to-the-point content tailored to each customer's needs.

What are the various types of recommendation systems?

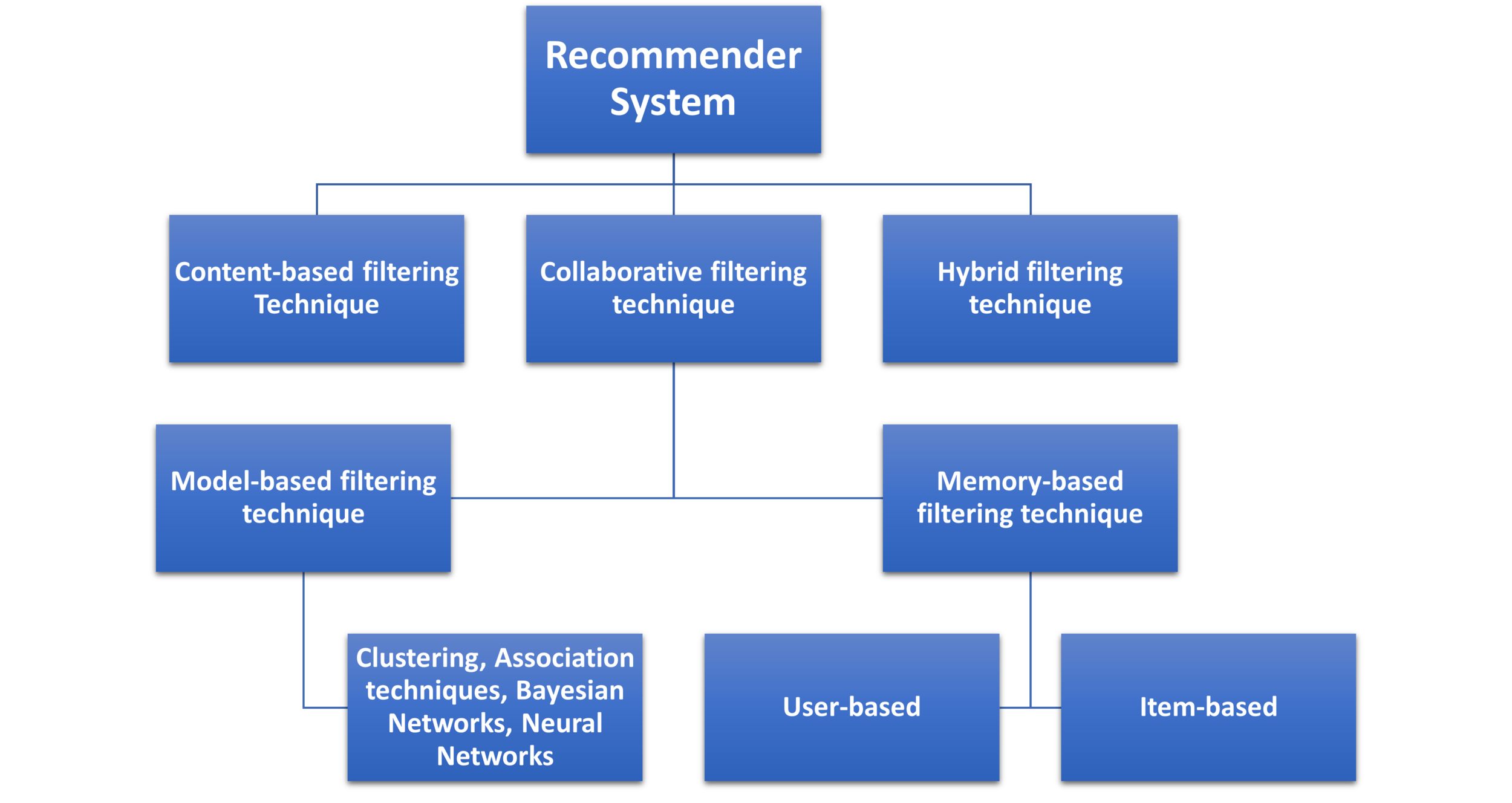

There are various types of recommendation systems, each using different techniques and approaches to generate predictions. The implementation largely depends on the use case (i.e., the business need it addresses), the scale of the project, and the amount and quality of available data. Broadly, there are content-based and collaborative filtering-based recommendation systems, with collaborative filtering further split into memory-based and model-based approaches.

Content-based recommendation systems filter items using explicit feedback, attributes, keywords, or descriptions of the products or services the user has liked. The algorithm recommends items similar to what the user has liked in the past or is currently looking for.

Collaborative filtering systems fall into two subgroups that use different methods:

Model-based: uses machine learning (ML) to extract information (e.g., ratings, feedback, reviews) from the data sets and builds an ML model from it.

Memory-based: analyzes a data set to find correlations and similarities between users or between items, and uses them to make recommendations.
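One widely used model-based technique is matrix factorization, which learns a small vector of latent factors for every user and every item so that their dot product approximates the known ratings. The sketch below trains such factors with stochastic gradient descent; the ratings, the number of factors `k`, the learning rate, and the regularization strength are all hypothetical values chosen for illustration.

```python
import random

def factorize(ratings, n_users, n_items, k=2, lr=0.01, reg=0.02, epochs=3000, seed=0):
    """Learn user factors P and item factors Q so that dot(P[u], Q[i]) approximates each known rating.

    ratings: list of (user_index, item_index, rating) triples for observed entries only.
    """
    rng = random.Random(seed)
    P = [[rng.uniform(0, 0.5) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.uniform(0, 0.5) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            pred = sum(P[u][f] * Q[i][f] for f in range(k))
            err = r - pred
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on squared error with L2 regularization
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q

def predict(P, Q, u, i):
    """Predicted rating of item i by user u."""
    return sum(p * q for p, q in zip(P[u], Q[i]))

# Hypothetical observed ratings on a 1-5 scale: (user, item, rating)
ratings = [(0, 0, 5), (0, 1, 3), (1, 0, 4), (1, 2, 1), (2, 1, 1), (2, 2, 5)]
P, Q = factorize(ratings, n_users=3, n_items=3)
estimate = predict(P, Q, 0, 2)  # user 0 never rated item 2
```

After training, `predict(P, Q, 0, 2)` estimates how user 0 would rate item 2, an entry that was missing from the data.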

What is content-based filtering?

Content-based recommendation systems work with the data the user provides, either through explicit or implicit feedback. As the user provides more input or acts on the initial recommendations, the system becomes more accurate.
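A minimal content-based recommender can be sketched by describing each item with a feature vector, building a user profile from the items the user liked, and ranking unseen items by their similarity to that profile. The genre features, item names, and the choice of cosine similarity with an averaged profile are assumptions for this example.

```python
import math

# Hypothetical item features: [action, comedy, documentary]
ITEMS = {
    "Movie A": [1.0, 0.0, 0.0],
    "Movie B": [0.9, 0.1, 0.0],
    "Movie C": [0.0, 1.0, 0.0],
    "Movie D": [0.0, 0.1, 0.9],
}

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def recommend(liked, items, top_n=2):
    """Rank items the user has not seen by similarity to the user's profile."""
    if not liked:
        return []
    # User profile = average feature vector of the liked items
    profile = [sum(vals) / len(liked) for vals in zip(*(items[name] for name in liked))]
    candidates = [name for name in items if name not in liked]
    return sorted(candidates, key=lambda name: cosine(profile, items[name]), reverse=True)[:top_n]

suggestions = recommend(["Movie A"], ITEMS)
```

A user who liked the action title "Movie A" is offered "Movie B" first, because its features are closest to that profile; as the user likes more items, the profile (and thus the ranking) is updated.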

What is collaborative filtering?

Collaborative filtering operates on the assumption that users who agreed in the past (e.g., liked the same items) are likely to agree again in the future. Collaborative filtering involves analyzing data that is typically laid out as a user-item matrix, in which each entry records a user's response to an item. An essential concept in collaborative filtering is using other users' feedback or ratings to generate predictions for a specific user. This feedback includes explicit ratings (e.g., liking or disliking, a rating on a scale of 1 to 10) and implicit feedback (e.g., viewing, adding to a wish list, time spent on the page).
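The memory-based (user-based) variant of collaborative filtering can be sketched as follows: compute how similar the target user is to every other user over the items they both rated, then predict the missing rating as a similarity-weighted average of the other users' ratings for that item. The users, items, and ratings are hypothetical, and cosine similarity is only one of several possible similarity measures.

```python
import math

# Hypothetical user-item ratings (1-5); a missing key means "not rated".
RATINGS = {
    "alice": {"item1": 5, "item2": 3, "item3": 4},
    "bob": {"item1": 4, "item2": 3, "item3": 4, "item4": 5},
    "carol": {"item1": 1, "item2": 5, "item4": 2},
}

def similarity(a, b):
    """Cosine similarity computed over the items both users have rated."""
    common = set(a) & set(b)
    if not common:
        return 0.0
    dot = sum(a[i] * b[i] for i in common)
    na = math.sqrt(sum(a[i] ** 2 for i in common))
    nb = math.sqrt(sum(b[i] ** 2 for i in common))
    return dot / (na * nb)

def predict(user, item, ratings):
    """Similarity-weighted average of other users' ratings for the item (None if nobody rated it)."""
    num = den = 0.0
    for other, their in ratings.items():
        if other == user or item not in their:
            continue
        sim = similarity(ratings[user], their)
        num += sim * their[item]
        den += abs(sim)
    return num / den if den else None

prediction = predict("alice", "item4", RATINGS)
```

Bob, whose ratings closely match Alice's, pulls the prediction for item4 toward his rating of 5, while Carol's rating of 2 has less influence. Because raw cosine similarity on positive ratings tends to be high for everyone, practical systems often subtract each user's mean rating first (Pearson correlation).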

Frameworks for developing AI, ML, DL solutions

To effectively create and deploy intelligent search and AI technologies, developers must choose appropriate frameworks. Each framework serves a particular purpose and has its own features and functions.

Microsoft Cognitive Toolkit (CNTK)

CNTK is an open-source toolkit for designing and developing neural networks of various types. It makes working with vast amounts of data easier through deep learning and supports practical model training for voice, image, and handwriting recognition.

TensorFlow, developed by Google and written in C++ and Python, is one of the most widely used open-source libraries for voice recognition, image recognition, and text applications. It is well suited to complex projects, e.g., building multi-layered neural networks.

Developed by Facebook, this tool is used primarily to train models quickly and efficiently. It offers several ready-made pretrained models and modular parts that are easy to combine. Its most important advantage is a transparent and straightforward model-creation process.

Apache MXNet is a highly scalable deep learning framework used by large companies and global web services, mainly for speech and handwriting recognition, natural language processing (NLP), and forecasting.

Deeplearning4j is a commercial-grade, open-source platform written primarily in Java and Scala. The framework is suitable for image recognition, natural language processing, vulnerability search, and text analysis.

IP Landscape

Key market players (Top US assignee)

The figure below shows that Google (3,138), Microsoft (2,137), and IBM (1,418) are the top three assignees by number of patent filings in the field of AI-powered search and recommendation systems.

Top 10 US patent assignees for AI-powered search and recommendation system.

(Source - Lumenci)

Conclusion

Artificial Intelligence and big data analytics have been taking root in our everyday lives, generating significant transformations. Content search and recommendation practices are becoming more and more human-like with the help of AI algorithms.

Search engines have undoubtedly gained popularity and play a significant role in the new digital era. With artificial intelligence, in-the-moment recommendations have become more widespread, saving users time and delivering practical value. Thanks to AI, recommendation engines have become more effective and can base their suggestions on customers' visual preferences rather than only on item descriptions.

*Disclaimer: This report is based on information that is publicly available and is considered to be reliable. However, Lumenci cannot be held responsible for the accuracy or reliability of this data.

Author

Iman Ali

Associate at Lumenci

Iman Ali is an Associate at Lumenci with experience in semiconductors and wireless communication. He holds a B.Tech degree in Electronics and Communications Engineering from Amity University. He is passionate about working on problem statements and delivering the best novelty-based technological solutions. In his spare time, he likes to brainstorm, solve problems, learn new skills, and listen to music.